What are bots and why are they viewing my site?

If you’ve only just noticed bot traffic on your site – or if you’re new to the world of websites and analytics – you might be wondering what exactly bot traffic is. It’s a simple concept. Bots, or robots, are programs created by humans to do all the things that we don’t like doing: mostly things like scraping the internet and collecting data that can be used for many (mostly useful) purposes.

So … are bots bad?

Short answer: it depends.

In some cases, bots provide helpful, essential services – like the crawling and indexing of your site done by Googlebot (Google’s web crawler). In other cases, they can be malicious, scraping your site for weaknesses.

Does bot traffic affect SEO?

Look. Bot traffic isn’t necessarily bad for SEO. But it can have side effects like slowing your site speed or causing website downtime that can harm your performance in the long run.

So you should block all bots from browsing your site, right?

Hold up, not so fast. Some bot traffic is incredibly important to your site’s SEO performance. While there are bad bots out there, there are equally good bots that are essential. So, while having high amounts of bot traffic could indicate that your site is at higher risk of threats if not secure, having no bot traffic shows that search engines aren’t crawling and indexing your site. And that’s a recipe for tanked rankings.

Am I blocking good bots?

If you’ve just built a new site or web page and are struggling to get it ranking, make sure you’re not blocking search engine bots. First, check your robots.txt file for rules that prevent search engine bots from crawling your site. Then, head to your homepage or new page, press Ctrl + U (Command + Option + U on Mac) to view the page source, and search for ‘noindex’. This is the tag that blocks search engines from indexing a page. If you find that you’re blocking bots and want to know how to allow them, here’s a helpful article on the Noindex Tag from Ahrefs.
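If you’d rather script those two checks, here’s a minimal Python sketch using only the standard library. It assumes you’ve already copied your robots.txt contents and page source into strings; the function names and sample values are ours, for illustration only. It only looks at robots meta tags, so it won’t catch a noindex sent as an HTTP header.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def is_blocked_by_robots(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the robots.txt text disallows user_agent from fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


class _RobotsMetaFinder(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None (e.g. <meta name>), so default to "".
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in {"robots", "googlebot"}:
            self.directives.append(attr.get("content", "").lower())


def has_noindex(html: str) -> bool:
    """Return True if any robots meta tag in the page source contains 'noindex'."""
    finder = _RobotsMetaFinder()
    finder.feed(html)
    return any("noindex" in directive for directive in finder.directives)


if __name__ == "__main__":
    # Sample inputs (made up for this example).
    sample_robots = "User-agent: *\nDisallow: /\n"
    sample_html = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
    print(is_blocked_by_robots(sample_robots, "Googlebot", "https://example.com/"))  # True
    print(has_noindex(sample_html))  # True
```

If either check comes back True for a page you want ranked, that’s the rule or tag to look at first.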

Okay. So what are the bad bots and how do I deal with them?

So you now know that most bots will simply crawl your site. But some bots are malicious and only exist to disrupt your business. They can do this in many ways, but the four biggest threats are: malicious web scraping bots, Distributed Denial-of-Service (DDoS) bots, spam bots and click fraud bots.

Malicious web scraping bots

If we’ve learnt anything from Batman, it’s that there are people out there who just want to watch the world burn. A large portion of these people are cyber criminals. Some cyber criminals have built armies of malicious bots to scrape your site, looking for ‘exploits’ they can use to penetrate your site’s security. These exploits are often found in out-of-date plugins or broken scripts. Weaknesses can be quickly found and abused by hackers – which is why you need to be sure that you’re keeping your site and its features up-to-date.

How to deal with malicious web scraping bots

The best way to keep your site safe from abuse by web scraping bots is to get managed website hosting and support, where your service provider keeps your site up-to-date and secure for you. If you’re interested in managed hosting and support, get in touch with us to discuss the right plan for your business.

Denial-of-Service bots

If your site is constantly going down due to surges in traffic, you could be the target of an onslaught of Distributed Denial-of-Service (DDoS) bots. Sent to your site by a third party, DDoS bots work by repeatedly completing resource-intensive tasks until your server starts to struggle. This can cause slowdowns and sometimes a complete server crash, during which your site will go down completely. It’s not pretty.

How to deal with Denial-of-Service bots

As with most security threats, the best thing you can do to prevent an attack is to keep your site up-to-date, minimising the risk of an exploit being found on your website. Get in touch if you’re having issues with your website’s security – we have a great hosting and management plan that will keep your site safe.

We also recommend using a Content Delivery Network (CDN), like Cloudflare, that’s designed to be better at handling large amounts of traffic. If you don’t want to use a CDN, we recommend you use a caching plugin like WP Rocket or build a static site using something like Gatsby. This will serve static pages as opposed to PHP (server-side rendered) pages, reducing server load.
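To see why caching takes the pressure off, here’s a toy Python sketch. It is not how WP Rocket, Gatsby or Cloudflare work internally; `PageCache` and `slow_render` are hypothetical names we’ve made up to show the idea that a page is rendered once and every later request gets the stored copy.

```python
class PageCache:
    """Toy page cache: render each path once, then serve the stored HTML."""

    def __init__(self, render):
        self._render = render      # the expensive server-side render function
        self._pages = {}           # path -> rendered HTML
        self.render_calls = 0      # how many times we actually had to render

    def get(self, path: str) -> str:
        if path not in self._pages:
            self.render_calls += 1
            self._pages[path] = self._render(path)
        return self._pages[path]


def slow_render(path: str) -> str:
    """Stand-in for a server-side rendered page (hypothetical)."""
    return f"<html><body>Content for {path}</body></html>"


if __name__ == "__main__":
    cache = PageCache(slow_render)
    # Simulate a burst of 1,000 requests for the same page.
    for _ in range(1000):
        cache.get("/services")
    print(cache.render_calls)  # 1
```

A thousand requests, one render. The server still has to answer each request, but it no longer rebuilds the page every time.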

Spam bots

Every day, your website deals with massive amounts of potential traffic. Your ability to manage your paying customers looking for your services largely comes down to your ability to respond to inquiries. And it’s frustrating trying to respond to legitimate inquiries when you’re wading through spam that’s come in through your contact forms. While that’s just one of the many types of spam botting, it is the most frustrating for Australian business owners, as it significantly slows down their inquiry response times.

How to deal with spam bots

Good news. Spam bots are way easier to manage than many other forms of malicious bots. You can successfully block the majority of bots using software that detects and blocks bot traffic.

You can block bots site-wide using a security plugin like Wordfence that will detect and block bad traffic. And you can block spam bots from using forms by fighting fire with fire: reCAPTCHA by Google works by combining a risk-based algorithm with machine learning (you guessed it – a bot). If your website runs on the WordPress CMS and uses Gravity Forms, here’s a guide on how to add reCAPTCHA to your site.

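Reduce the number of bots spamming your emails by obscuring your email address on your website using a function like ‘antispambot’, which converts the characters of an email address into HTML entities. Browsers still display the address normally, but simple scrapers reading the page source are less likely to recognise it.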
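Here’s a rough Python illustration of the entity-encoding idea. It is not WordPress’s ‘antispambot’ (that’s a PHP function with its own behaviour); `obfuscate_email` is a hypothetical helper that simply encodes every character as a decimal HTML entity.

```python
import html


def obfuscate_email(address: str) -> str:
    """Encode every character of the address as a decimal HTML entity."""
    return "".join(f"&#{ord(ch)};" for ch in address)


if __name__ == "__main__":
    encoded = obfuscate_email("hello@example.com")
    print(encoded)                 # what appears in the page source
    print(html.unescape(encoded))  # what a browser would display
```

The encoded string contains no literal ‘@’ for a naive scraper to match on, but it decodes back to the original address. A determined bot can decode entities too, so treat this as one layer of protection rather than a fix.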

It’s important to remember that of all the ways spam bots can attack your site, forms are the biggest open door for abuse. So, we recommend you take some or all of the following steps to protect your site.

- Remove forms that are not needed.

- Avoid file upload functionality if possible.

- Secure your form submissions by using reCAPTCHA (‘I’m not a robot’ checks).

- Use third-party spam filter services like Akismet to filter form submissions before they get to you.

Click fraud bots

The biggest threat to Pay Per Click advertising is a little something called click fraud. It’s the act of clicking ads with the goal of blowing the ad budget of the victim. The simplicity of this task makes it the perfect subject for bot programmers, who design programs that can target a specific ad and repeatedly click it until it no longer appears in search results.

How to deal with click fraud

The good news is, you probably don’t have to worry too much about click fraud – at the moment. We haven’t seen it affect our clients yet. However, it’s quickly becoming more prevalent, which is why Distl is getting prepared, and you should be too. We’ve researched a whole host of solutions, and what we’ve found is that most operate using advanced AI to monitor the behavior and IP address of users clicking your ad. If they’re detected as fraudulent, they’re added to your Google Ads IP exclusion list, so they can’t view your ads in search results anymore.
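To give a feel for the ‘monitor behavior by IP address’ idea, here’s a deliberately simple Python sketch. Real click fraud tools use far richer signals than this; the function name, the threshold of 3 clicks and the 60-second window are all assumptions we’ve picked for the example.

```python
from collections import defaultdict, deque


def flag_suspicious_ips(clicks, max_clicks=3, window_seconds=60):
    """Return the set of IPs with more than max_clicks ad clicks inside any window.

    clicks is an iterable of (timestamp_in_seconds, ip_address) pairs.
    """
    recent = defaultdict(deque)  # ip -> timestamps still inside the window
    flagged = set()
    for timestamp, ip in sorted(clicks):
        window = recent[ip]
        window.append(timestamp)
        # Drop clicks that have fallen out of the time window.
        while timestamp - window[0] >= window_seconds:
            window.popleft()
        if len(window) > max_clicks:
            flagged.add(ip)
    return flagged


if __name__ == "__main__":
    # Sample click log using documentation-only IP addresses.
    sample_clicks = [
        (0, "203.0.113.5"), (5, "203.0.113.5"), (10, "203.0.113.5"), (15, "203.0.113.5"),
        (0, "198.51.100.7"), (200, "198.51.100.7"),
    ]
    print(flag_suspicious_ips(sample_clicks))  # {'203.0.113.5'}
```

The flagged addresses are candidates for an IP exclusion list. You’d want to review them before excluding anyone, because shared office or mobile networks can look like a single busy IP.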

If you’re worried about click fraud, one of the better known providers of click fraud protection, ClickCease, shared the 5 most common industries click fraud bots target.

They are:

- Photography – 65%

- Pest Control – 62%

- Locksmith – 53%

- Plumbing – 46%

- Waste Removal – 44%

How to identify and remove bot traffic in Google Analytics

If your traffic is significantly higher than normal, it’s likely that a large portion of it is bot traffic. And we’re guessing that this is affecting your reporting. Here’s how you can identify and remove bot traffic from analytics.

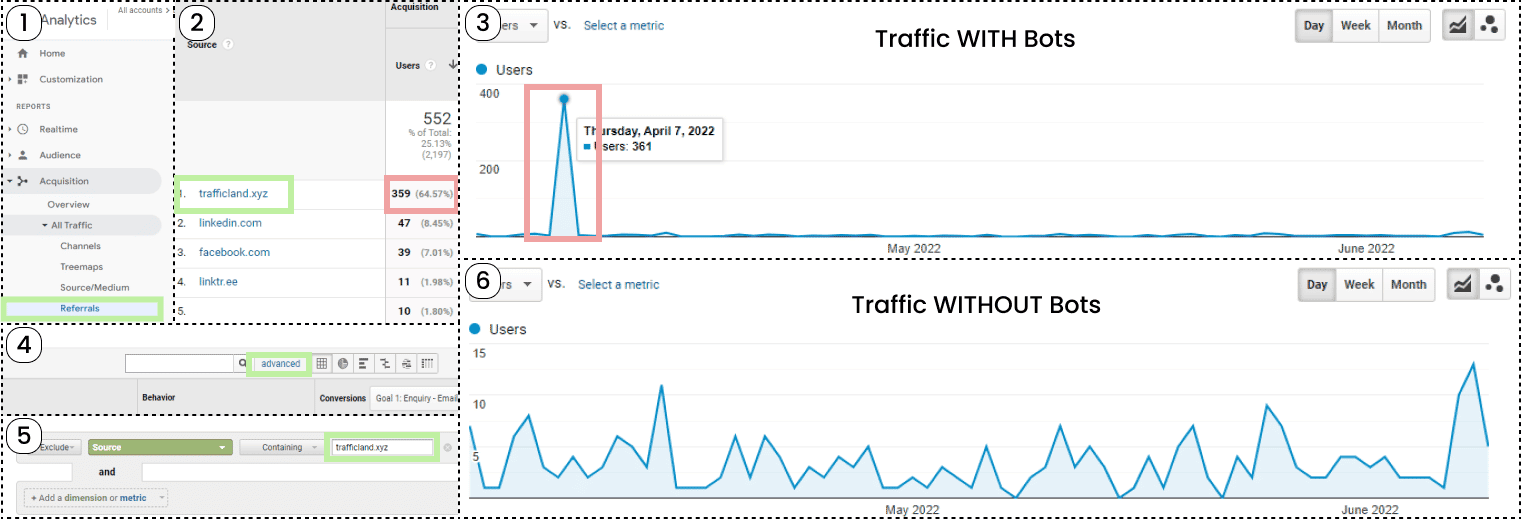

The following steps match the numbers in the image above.

1. Head over to your Google Analytics dashboard and look for the Acquisition tree in the left-hand panel. Within this menu, you will find All Traffic > Referrals. Click Referrals, and you will see where all your visitors are coming from.

2. In the referral dashboard, look at your traffic statistics for the past 2-3 months. If you have bot traffic, you should see an obvious outlier.

3. Next, take a look at your chart. Assuming your site sees a volume consistent with a normal service-based business’ website, you should see a large spike which flattens your other website traffic.

4. To filter the traffic, click the ‘advanced’ button in the filters toolbar. You’ll open a filter tool that you can use to remove traffic with your chosen properties.

5. Using the drop-downs, select Exclude, Source, and Containing. In the input field, type the name of your referring bot traffic, then hit Apply.

6. Your traffic graph should now look consistent with that of a normal traffic chart, as seen above in Image 5.
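If you export your referral report, the same ‘spot the outlier, then exclude it’ logic can be sketched in a few lines of Python. This doesn’t talk to Google Analytics at all. The row format, the function names, the sample domains and the ‘10 times the median’ rule are assumptions made for this example, and matching case-insensitively is our choice rather than a statement about how the Analytics filter behaves.

```python
from statistics import median


def find_outlier_sources(rows, factor=10):
    """Return sources whose sessions exceed factor times the median source."""
    typical = median(row["sessions"] for row in rows)
    return [row["source"] for row in rows if row["sessions"] > factor * typical]


def exclude_sources_containing(rows, pattern):
    """Drop rows whose source contains pattern (the Exclude > Source > Containing step)."""
    needle = pattern.lower()
    return [row for row in rows if needle not in row["source"].lower()]


if __name__ == "__main__":
    # Made-up referral data; the domains are placeholders.
    referrals = [
        {"source": "partner-site.example", "sessions": 120},
        {"source": "local-directory.example", "sessions": 95},
        {"source": "news-blog.example", "sessions": 80},
        {"source": "bot-traffic.example", "sessions": 9000},
    ]
    print(find_outlier_sources(referrals))  # ['bot-traffic.example']
    cleaned = exclude_sources_containing(referrals, "bot-traffic")
    print([row["source"] for row in cleaned])
```

A high-traffic source isn’t automatically a bot, so check what the outlier actually is before you filter it out of your reports.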

All too confusing? Here’s a bot summary.

To keep it simple, here’s our list of good bots and bad bots.

Good bots

Search engine bots: Googlebot, Bingbot, Baidu Spider, and Slurp (Yahoo!)

These bots crawl and index content from websites across the internet so that they can understand and recommend your business to customers looking for your services. They are essential to the service that search engines offer.

Marketing tool bots: Semrushbot (Semrush) and AhrefsBot (Ahrefs)

Marketing tool bots crawl the internet for link data that provides crucial insights to digital marketers around the world. This information can then be used to better understand your customers and serve them better.

Aggregator bots and price comparison bots:

These are web crawlers that scrape the internet looking for relevant content, such as reviews and deals, that they can on-sell to users looking for goods and services. These bots help you find great deals and help you filter the countless services on the web to find the best match for your needs.
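If you’re looking through server logs, the search engine and marketing tool bots named above usually identify themselves in the User-Agent string. Here’s a small Python sketch that matches on those names. The lookup table and function name are ours, and because a User-Agent can be faked by anyone, a match here is a hint rather than proof.

```python
# Lowercase name fragments that these crawlers include in their User-Agent strings.
KNOWN_GOOD_BOT_TOKENS = {
    "googlebot": "search engine",
    "bingbot": "search engine",
    "baiduspider": "search engine",
    "slurp": "search engine",
    "semrushbot": "marketing tool",
    "ahrefsbot": "marketing tool",
}


def classify_user_agent(user_agent: str):
    """Return the bot category if the User-Agent names a known good bot, else None."""
    ua = user_agent.lower()
    for token, category in KNOWN_GOOD_BOT_TOKENS.items():
        if token in ua:
            return category
    return None


if __name__ == "__main__":
    print(classify_user_agent(
        "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
    ))  # search engine
    print(classify_user_agent("Mozilla/5.0 (Windows NT 10.0; Win64; x64)"))  # None
```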

Bad bots

Click fraud bots:

Click fraud bots have one job: to deplete the marketing budget of online businesses. They work by clicking your Google Ads or any other form of Pay Per Click advertising that you may be running.

Spam bots:

Spam bots run automated tasks across the internet: filling out forms, posting comments and sending emails to sites across the web hoping to disrupt business or promote services.

DDoS bots (Distributed Denial-of-Service bots):

DDoS bots maliciously flood your site and carry out resource-intensive tasks to intentionally slow down or completely stop your website server’s operation.

Scraping bots:

Scraping bots crawl the internet for vulnerabilities, hoping to penetrate your security, steal your customer data and infect your website with malware by exploiting out-of-date plugins and other holes that may exist in your security.